Caching for WordPress with Varnish

AKA “How to use Varnish like a king”

Angry Creative provides its own hosting to make sure we can provide the most value and minimise issues in our projects and ongoing work with our clients. In this article, our infrastructure expert Toni Cherfan shares how we configure Varnish caching to work with WordPress and WooCommerce.

Why do it?

Computing capacity is and will always be a limited resource. Back in the 1960s during NASA’s Apollo programme that took the US to the moon, the Apollo Guidance Computer (AGC) experienced two “program alarms”, namely 1201 (Executive Overflow – No core sets) and 1202 (Executive Overflow – No VAC areas). They were caused by the astronauts leaving the radar in SLEW mode, which flooded the AGC with interrupt signals that prevented it from performing all the tasks it needed to: it simply couldn’t process the data it was receiving at a high enough rate to keep up. You can read more about it here.

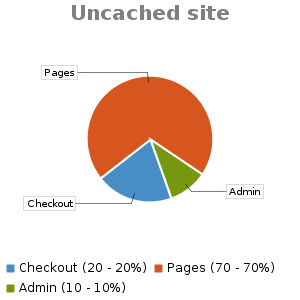

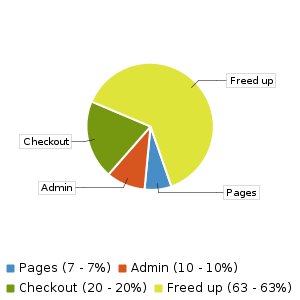

Limited computing capacity can be thought of as a pool of resources that every task on the server has to share. It is illustrated as a pie chart below.

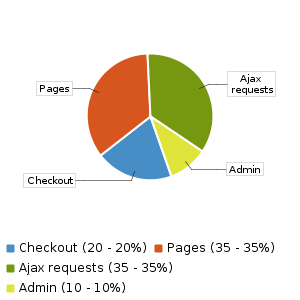

⚠️ These pie charts are completely arbitrary and do not represent any real data!

As a developer, it’s your responsibility to use these resources effectively. Varnish exists to help you with this task.

How to do it?

On an uncached site, the pie chart above is highly influenced by user traffic patterns, but also by design choices. For example, if you decide to load half of the page with AJAX, then the resource pool now looks like this:

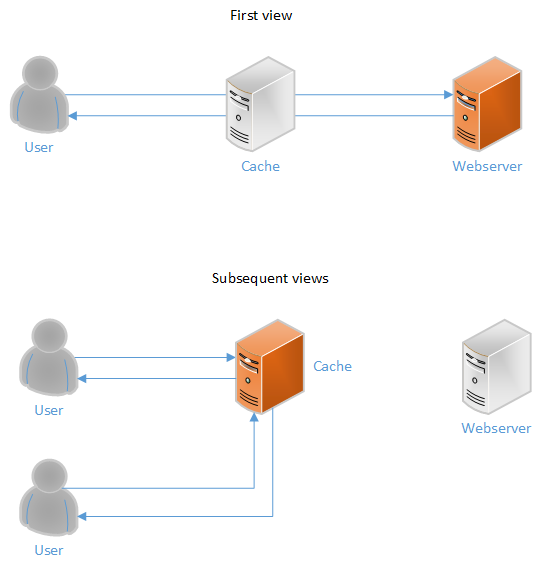

The purpose of an HTTP cache such as Varnish is to offload the backend by serving the result of a single request to multiple users.

- On the first page view, the cache is basically invisible. It looks up the request in the cache data store and finds nothing. The request is therefore sent to the backend web server, but on the way back to the user it is also stored in the cache data store.

- On subsequent requests for the same page, the cache looks up the request in exactly the same way as last time, but this time it finds an entry for the requested page. The stored response is therefore sent straight to the user without involving the backend, and the same happens for every following request for that page.

This means a significant saving in resources, because instead of having to run server-side scripts, make database queries and compile the requested page, the cache simply re-serves the pre-compiled page. That’s the difference between having to run a program and just serving a static file: a huge reduction in the computing resources needed.

But of course there are many situations where serving exactly the same page to multiple users can be bad. Let’s look at that.

Dealing with cookies

The purpose of cookies is to influence the behaviour of the server when creating responses. In the case of user sessions, the cookie contains a unique identifier for the logged-in user. By definition, this means that a response requested with a cookie cannot be sent to multiple users, because it was generated for a single user. A request with a cookie may therefore seem uncacheable.

In the real world, all sorts of things set cookies, for example Google Analytics and other tracking software. It is therefore inappropriate to assume that just because a request contains cookies, it is also uncacheable. One solution is to force the request to be cacheable by removing the user-specific parts of the request, or in plain language: disabling all cookies. In the Varnish Configuration Language (VCL), this is done with something like:

```vcl
sub vcl_recv {
    unset req.http.Cookie;          ## Strip all cookies from the incoming request
}

sub vcl_backend_response {
    unset beresp.http.Set-Cookie;   ## Strip all cookies set by the backend
}
```

So what is the problem with applying the above? The site would not be able to generate user-specific content! This is almost fine on a CMS site, except that you would not be able to use the admin, because the admin requires sessions. On an e-commerce site, the problem gets much worse because the shopping basket and checkout are by definition user-specific. They would not work without cookies. Therefore, we need a mechanism to make exceptions to this. This is addressed in the VCL section below.

🚨 Some REALLY bad caching implementations ignore cookies present in the request and cache the response anyway. The result is a total disaster where the admin, checkout and shopping cart can be cached with data from other users. 🚨

⚠️ There are actually lots of different ways to handle cookies. For example, Varnish’s default behaviour is simply to skip caching for requests that contain cookies. In theory, you could also handle such requests dynamically: have the backend send a header indicating whether the page is uncacheable, restart the request pipeline, and mark the currently processed URL as a hit-for-pass object. There is no specific advantage to how we do things; it’s just a design decision.
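For reference, this is roughly what Varnish does on its own when your VCL does not return from vcl_recv first. It is a simplified excerpt based on the built-in VCL (builtin.vcl) shipped with Varnish 6.0; the exact file differs between versions, so check the one in your own installation:

```vcl
## Simplified excerpt based on Varnish 6.0 builtin.vcl (some checks left out).
## It runs after your own vcl_recv, unless your code has already returned.
sub vcl_recv {
    if (req.method != "GET" && req.method != "HEAD") {
        ## Only GET and HEAD requests are looked up in the cache
        return (pass);
    }
    if (req.http.Authorization || req.http.Cookie) {
        ## Any cookie at all makes the request uncacheable by default
        return (pass);
    }
    ## Everything else is hashed and looked up in the cache
    return (hash);
}
```

This is why a site where a tracking script sets cookies for every visitor gets very few cache hits with an unmodified Varnish configuration.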

What about speed?

A side effect of caching HTTP responses is that delivering a rendered response from memory is much faster than rendering that response from the backend. Computers are very good at copying data, and in the case of Varnish, we only need to copy a memory location to a network socket so that the browser can receive it. This is orders of magnitude faster than starting PHP, loading WP and reading through the site code. However, this is not our main goal!

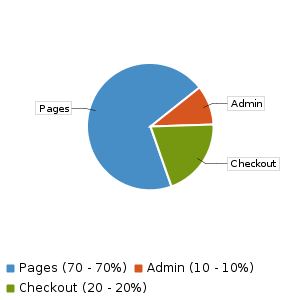

Our goal is to free up backend resources so they can be better used. Take our resource pool again:

We’ve already established in the cookie section above that the admin and checkout are not cacheable. This means that the remaining pages are cacheable. Assuming a 90% hit rate on those pages, this would result in a new resource pool as follows:

This is what we want to achieve!

In this example, we can now more than double visitor capacity and still have space left over for other things. Varnish has enabled us to do more with less.

⚠️ This guide is mostly aimed at developers, not marketers! In the marketing world, it’s much easier to sell Varnish as something that speeds up your site than as something that gives you better resource utilisation. However, speed is just a side effect, albeit a valuable one!

A few words about hashing

Think of the cache as a table of key-value pairs. The request is the key and the value is the content of the response. The key is usually built from parts of the request such as:

- HTTP scheme (is the request http or https?)

- HTTP method (a GET request is different from a HEAD request, which in turn is different from a POST)

- URL

- The domain name of the website

ℹ The so-called “query string” is the part of the URL that starts with a question mark, such as ?search=socks. As part of a full URL, it looks something like this: www.yoursite.com/?search=socks

But storing all this information in the key would waste a lot of space, as a single URL alone can be 2048 bytes long or more! Therefore, the data is first passed through a hashing algorithm (Varnish uses SHA-256) whose details are beyond the scope of this page (you can read about it here). The result is a short, fixed-size digest that takes up much less space. This happens in vcl_hash (see below), where the hash_data() function lets us add more data to the hash key.
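In Varnish specifically, the default key is smaller than the list above suggests. The excerpt below is based on the built-in vcl_hash in Varnish 6.0 (check builtin.vcl for your version): only the URL, including the query string, and the Host header (or the server IP if there is no Host header) are hashed. The method is not hashed; instead, the built-in vcl_recv only looks up GET and HEAD requests at all.

```vcl
## Excerpt based on Varnish 6.0 builtin.vcl: the default cache key
sub vcl_hash {
    hash_data(req.url);             ## Path and query string, e.g. /?search=socks
    if (req.http.host) {
        hash_data(req.http.host);   ## The domain name of the website
    } else {
        hash_data(server.ip);       ## Fallback when there is no Host header
    }
    return (lookup);                ## Look the finished hash up in the cache
}
```

A custom vcl_hash that calls hash_data() but does not return falls through to this built-in version, so the extra data is added on top of the URL and host rather than replacing them.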

Varnish logic (VCL)

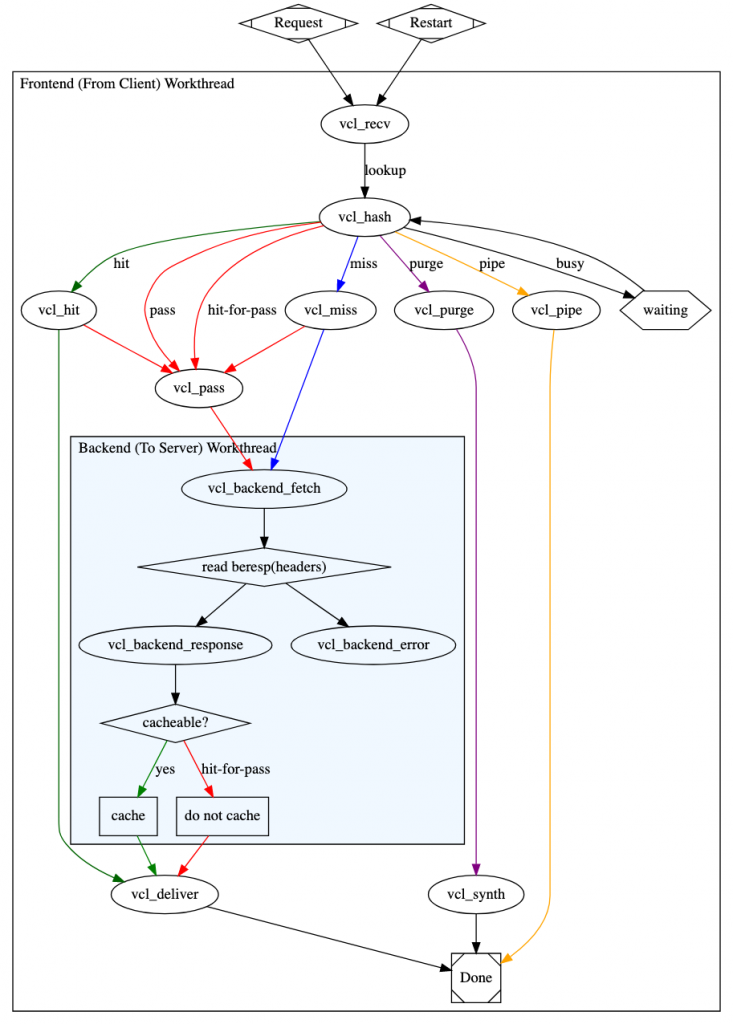

The Varnish Configuration Language (VCL) is a programming language in itself. The syntax and functions are beyond the scope of this document (you can read more about them here), but the concepts below should be illustrative enough to be read by most programmers.

To understand how Varnish does things and how Synotio’s default VCL works, we need to look at the Varnish request pipeline:

This looks complicated, but many of the steps happen automatically and we don’t need to bother with them. Let’s start from the top:

vcl_recv

This step is called when the request is received by Varnish. It is at this stage that we decide whether the request should be cached or not.

Synotio’s default vcl_recv looks like this:

```vcl
sub vcl_recv {
    ## Handle PURGE requests differently depending if we're purging an exact URL or a regex, set by the varnish-http-purge plugin
    ## See the purging section below for more information on this
    if (req.method == "PURGE") {
        if (req.http.X-Purge-Method ~ "(?i)regex") {
            call purge_regex;
        } elsif (req.http.X-Purge-Method ~ "(?i)exact") {
            call purge_exact;
        } else {
            call purge_exact;
        }
        return (purge); ## Terminate the request. There is no point in sending it to the backend since it's meant for varnish.
    }

    set req.backend_hint = cms_lb.backend(); ## Set the backend (director cms_lb, defined elsewhere) that will receive the request
    set req.http.X-Forwarded-Proto = "https"; ## Force the backend to believe the request was served using HTTPS

    if (req.url ~ "(wp-login|wp-admin|wp-json|preview=true)" || ## Uncacheable WordPress URLs
        req.url ~ "(cart|my-account/*|checkout|wc-api/*|addons|logout|lost-password)" || ## Uncacheable WooCommerce URLs
        req.url ~ "(remove_item|removed_item)" || ## Uncacheable WooCommerce URLs
        req.url ~ "\?add-to-cart=" || ## Uncacheable WooCommerce URLs (backslash is not special in VCL strings)
        req.url ~ "\?wc-(api|ajax)=" || ## Uncacheable WooCommerce URLs
        req.http.cookie ~ "(comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_logged_in)" || ## Uncacheable WordPress cookies
        req.method == "POST") ## Do NOT cache POST requests
    {
        set req.http.X-Send-To-Backend = 1; ## X-Send-To-Backend is a special variable that will force the request to directly go to the backend
        return (pass); ## Now send off the request and stop processing
    }

    unset req.http.Cookie; ## Unset all cookies, see the "Dealing with cookies" section
}
```

vcl_hash

This step is mostly unused by our systems, but there are a few unique cases where it is needed. Imagine you have a global store where you need to apply different VAT rates depending on where the visitor is located. In this case, we need to add data to the hash key to ensure that the visitor’s GeoIP country is taken into account as the backend will produce different responses based on that. For example:

```vcl
sub vcl_hash {
    hash_data(req.http.X-GeoIP-Location); # We assume here that the visitor location is stored in a header called X-GeoIP-Location
}
```

Without this, the response generated for the first visitor will be cached and sent out to all users. This has the potential to cache the wrong data if the backend creates different responses depending on variables that Varnish does not take into account.

🚨 This example is pretty bad due to cache fragmentation. There are 195 different countries in the world at the time of writing. Caching 195 different versions of the same URL would likely reduce your hit rate to almost nothing (see the TTL section for information on hit rates). In the real world, we would bind lists of countries to VAT percentages and hash them instead.
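A minimal sketch of that approach, under a few assumptions: the country code arrives in the X-GeoIP-Location header used above, while the X-VAT-Group header name, the group names and the country lists are hypothetical placeholders. It replaces hashing X-GeoIP-Location directly. The backend must apply exactly the same grouping, otherwise visitors in the same group could still be served a response meant for another country.

```vcl
sub vcl_recv {
    ## Hypothetical grouping: countries that share a VAT rate share a group.
    ## The real lists must mirror the VAT rules the backend applies.
    if (req.http.X-GeoIP-Location ~ "^(SE|DK)$") {
        set req.http.X-VAT-Group = "vat-group-a";
    } elsif (req.http.X-GeoIP-Location == "DE") {
        set req.http.X-VAT-Group = "vat-group-b";
    } else {
        set req.http.X-VAT-Group = "vat-group-default";
    }
}

sub vcl_hash {
    ## A handful of groups instead of ~195 countries keeps fragmentation low
    hash_data(req.http.X-VAT-Group);
}
```

Because the X-VAT-Group header stays on the request, it is also forwarded to the backend on a miss, so the backend could read the group directly instead of repeating the country lookup. That keeps Varnish and WordPress from disagreeing about which group a visitor belongs to.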

vcl_backend_response

This step is called after the request has been sent to the backend and the backend has responded. This happens either after a return(pass); in vcl_recv, or when the requested object was not found in the cache (a miss). The purpose of this step is to set the cache TTL and process headers.

Synotio’s default vcl_backend_response looks like this:

```vcl
sub vcl_backend_response {
    if (beresp.http.Content-Type ~ "text") {
        set beresp.do_esi = true; ## Do ESI processing on text output. Used for our geoip plugin and a few others.
        ## See https://varnish-cache.org/docs/6.1/users-guide/esi.html
    }

    if (bereq.http.X-Send-To-Backend) { ## Our special variable again. It is here that we stop further processing of the request.
        return (deliver); ## Deliver the response to the user
    }

    unset beresp.http.Cache-Control; ## Remove the Cache-Control header. We control the cache time, not WordPress.
    unset beresp.http.Set-Cookie; ## Remove all cookies. See the "Dealing with cookies" section above
    unset beresp.http.Pragma; ## Yet another cache-control header

    ## Set a lower TTL when caching images. HTML costs a lot more processing power than static files.
    if (beresp.http.Content-Type ~ "image") {
        set beresp.ttl = 1h; ## 1 hour TTL for images
    } else {
        set beresp.ttl = 24h; ## 24 hour TTL for everything else
    }
}
```

vcl_deliver

This step is called just before the response is delivered to the browser. It is used to perform post-processing after we have a response to send. Synotio’s default vcl_deliver looks like this:

```vcl
sub vcl_deliver {
    if (obj.hits > 0) { ## Add the X-Cache: HIT/MISS/BYPASS header
        set resp.http.X-Cache = "HIT"; ## If we had a HIT
    } else {
        set resp.http.X-Cache = "MISS"; ## If we had a MISS
    }

    if (req.http.X-Send-To-Backend) { ## Our special variable. Signifies a hardcoded bypass
        set resp.http.X-Cache = "BYPASS"; ## If we had a BYPASS
    }

    unset resp.http.Via; ## Remove the Via header for security reasons. We don't want to expose that we run Varnish (or which version).
    unset resp.http.X-Varnish; ## Remove the X-Varnish header for security reasons. It contains Varnish transaction IDs and also reveals that we run Varnish.
}
```

Designing for caching

When writing code, you need to think about the audience your HTTP response is targeting:

- Is it aimed at a specific user? If so, it is uncacheable.

- Is it meant to be displayed to multiple users? If so, it is probably cacheable.

- Does it have any specific conditions attached to it that change the output based on those conditions? If so, it probably needs special configuration.

One nice thing about JavaScript is that it scales 100% linearly with the number of users on the site: each browser adds its own local computing power, so code that runs in the browser does not add to the load on your server. A good way to compare designing for caching with not doing so is a currency switcher:

Case 1: The browser sends a cookie to the server with a session ID. The currency is stored in the PHP session and the site sets different pricing depending on what is stored in that session.

Result: This would make almost the whole site uncacheable as all pages with prices are expected to get user-specific data (the session cookie).

Case 2: The browser sends a cookie to the server with the selected currency in plain text (SEK/ZAR/USD/EUR/Other). The website reads the cookie and displays different pricing depending on what is stored in the selected currency cookie.

Result: This requires special configuration and is difficult to implement. Instead of deleting all cookies, we need to parse the cookie header in vcl_recv and delete every cookie except the currency cookie, then add the remaining value to the hash in vcl_hash, while making sure that this cookie still reaches the backend. This is doable but error-prone, and debugging this scenario can quickly become a nightmare. On top of that, the backend has to render each page again for every new value of the currency cookie. This adds server load, as each page can now be rendered in X different ways, where X is the number of possible currencies.
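To give an idea of what that parsing involves, here is a sketch based on the cookie-filtering pattern from the Varnish users guide. It assumes a hypothetical cookie named currency and is written as a separate vcl_recv that would come after the main one, with the main one's final unset req.http.Cookie; removed, so it only affects requests that have not already been passed to the backend. Note also that the vcl_backend_response above strips Set-Cookie from cacheable responses, so the currency cookie would have to be set by JavaScript or by an uncacheable URL.

```vcl
sub vcl_recv {
    if (req.http.Cookie) {
        ## Keep only the hypothetical "currency" cookie and drop all others
        set req.http.Cookie = ";" + req.http.Cookie;
        set req.http.Cookie = regsuball(req.http.Cookie, "; +", ";");
        set req.http.Cookie = regsuball(req.http.Cookie, ";(currency)=", "; \1=");
        set req.http.Cookie = regsuball(req.http.Cookie, ";[^ ][^;]*", "");
        set req.http.Cookie = regsuball(req.http.Cookie, "^[; ]+|[; ]+$", "");
        if (req.http.Cookie == "") {
            unset req.http.Cookie;  ## Nothing left: treat it as a cookieless request
        }
    }
    if (req.method == "GET" || req.method == "HEAD") {
        ## Skip the built-in vcl_recv, which would pass any request that still has a Cookie header
        return (hash);
    }
}

sub vcl_hash {
    ## After filtering, the Cookie header contains at most "currency=XXX"
    if (req.http.Cookie) {
        hash_data(req.http.Cookie);
    }
}
```

The filtered Cookie header is forwarded to the backend on a miss, so WordPress still sees the selected currency. Every extra currency value multiplies the number of cached copies of each page, which is exactly the cost described above.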

Case 3: The server outputs a JSON array with all prices on the page in the different currencies. The displayed price is selected in JavaScript based on a value in local storage (you can use cookies for this, just don’t read them from the backend). The page content remains the same from a Varnish perspective but the page is customised on load (in JavaScript) to suit the visitor. A nice side effect of this approach is that switching to a new currency would not require a page reload if implemented correctly. The server also doesn’t have to render the same page many times to output different currencies, which reduces the load.

Result: Awesome!

Troubleshooting cookies

Your main tool for debugging requests involving cookies with this configuration is the X-Cache header. If it is not present at all, the site does not appear to be cached by Varnish configured as described in this article, and you may need to check your server configuration.

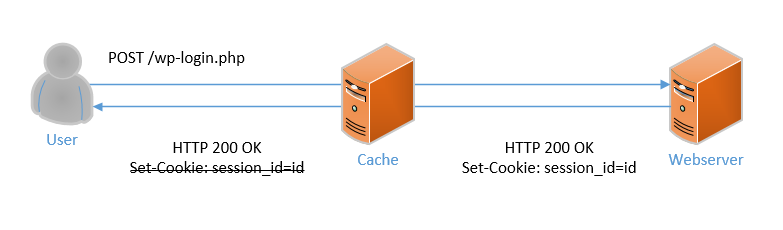

When troubleshooting, you need to know the URLs involved and the type of data to send back and forth. Take for example a website that always disables cookies.

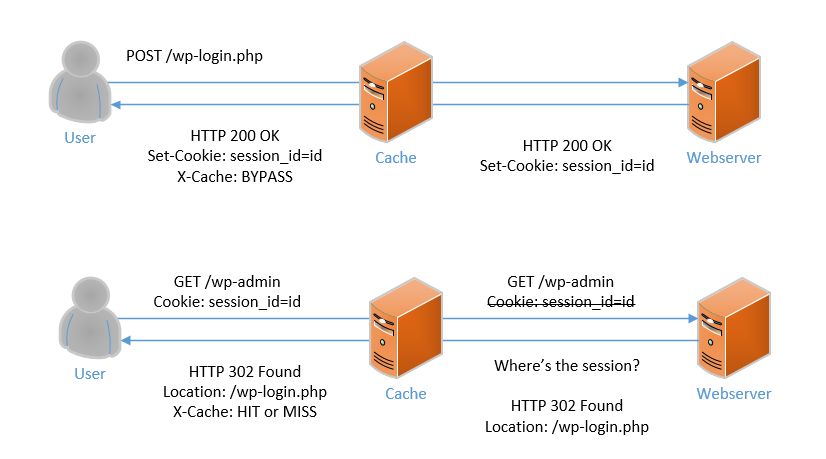

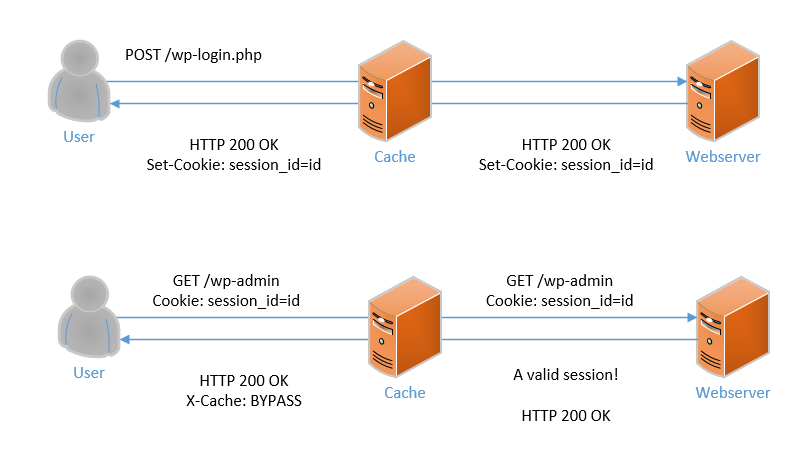

During login, a POST is sent to /wp-login.php with the user credentials. If the credentials are correct, the server must respond with a Set-Cookie header containing the user’s session ID. If we disable all cookies, the Set-Cookie header is removed, so the browser never receives the session cookie and the next request does not carry it.

If we slightly modify the above scenario so that we still disable cookies, but only on GET requests, we get a different behaviour: after POSTing to /wp-login.php, the backend successfully sends a Set-Cookie header to the browser. The browser stores the cookie and sends it on the next request, which is a GET. The backend now responds with a redirect back to the login page, because even though the browser sends the cookie, Varnish deletes it before passing the request to the backend. Remember that GET requests are cacheable by default and therefore cannot carry user-specific data such as cookies, unless an exception for them is coded in vcl_recv.

If we modify the above scenario again, but this time code an exception for wp-admin in vcl_recv, we get a working WP Admin. The POST to /wp-login.php sets a session identifier as a cookie. The browser receives the session identifier and proceeds with a GET request to /wp-admin containing the cookie. Varnish knows that this request is uncacheable and therefore sends it as-is to the backend. The backend responds with the WP Admin panel because the session identifier was valid.

We use the X-Cache header to represent the different scenarios:

- The output contains an X-Cache: BYPASS header: Varnish determined, using vcl_recv, that the request was uncacheable and therefore should be sent as-is directly to the backend.

- The output contains an X-Cache: HIT header: Varnish determined, using vcl_recv, that the request was cacheable and looked up the request in the cache. The result was found and served from the cache without involving the backend.

- The output contains an X-Cache: MISS header: Varnish determined, using vcl_recv, that the request was cacheable and looked up the request in the cache. The result was not found, so it was retrieved from the backend and then stored in the cache. The next result will be HIT.

Most of the problems you debug will likely involve uncacheable requests being treated as cacheable. Make sure you determine the above information as part of your debugging process. You can also manually set cookies in your browser that force a cache bypass, such as the wordpress_logged_in cookie. See the vcl_recv section above for possible cookie names.

TTL

ℹ A URL that has been stored in the Varnish cache is called an object.

The Time To Live (TTL) value set in vcl_backend_response (see the VCL section above) determines how long objects will remain in the cache. Objects can be removed earlier if they are banned (purged) or if the cache is full and space is needed for new objects. In that case, Varnish evicts the least recently used objects first, so objects that are requested often are more likely to stay in storage than rarely requested ones.

When the TTL expires, the item is not immediately removed from the cache. Rather, it is marked as expired and will remain so until a new object can replace it. When an expired object is accessed during the lookup phase (see Varnish request pipeline above), there are various possible design patterns that can be applied:

- Fetch a new object from the backend to replace the expired object. This is how our Varnish implementation currently works under normal conditions.

- Serve the stale (expired) object but fetch the new object from the backend in the background. This allows Varnish to deliver a response immediately, without having to wait for the backend. The downside of this method is obviously that we serve stale objects. We switch to this method if the Varnish health check has marked the backend as down. The alternative would be to serve an HTTP 503 instead.

It is possible to apply the second approach before the TTL has expired, for example when the remaining TTL for the object is less than 30 minutes. This lets Varnish update objects in the background while still respecting their TTL, and we may move to this method in the future.
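A minimal sketch of the current policy (fresh objects while the backend is healthy, stale ones while it is down), assuming Varnish 6.0 or later, where req.grace exists, and the std.healthy() function from vmod_std. The 6h grace period is an arbitrary example value, not Synotio’s setting. Since Varnish concatenates repeated definitions of the same subroutine in the order they appear, the vcl_recv part is meant to come after the main vcl_recv, so that req.backend_hint already points to cms_lb.

```vcl
import std;  ## For std.healthy(); leave out if your VCL already imports std

sub vcl_recv {
    if (std.healthy(req.backend_hint)) {
        ## Backend is up: never serve expired objects, wait for a fresh copy instead
        set req.grace = 0s;
    }
    ## Backend is down: req.grace is left unset, so the object's own grace applies
}

sub vcl_backend_response {
    ## Keep expired objects for up to 6 hours so they can be served while the backend is down
    set beresp.grace = 6h;
}
```

Setting req.grace to a small positive value instead of 0s would give a limited form of background refreshing even while the backend is healthy, since objects that expired within that window are served stale while Varnish fetches a new copy.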

The (almost) infinite TTL

The problem with low TTLs is obvious: they require us to fetch objects from the backend more often, which increases the load on the backend and reduces the efficiency of the cache. This efficiency is expressed as the hit rate, which is the percentage of how many requests in a given time frame have been served from the cache. A hit rate of 50% means that half of the requests were served from the cache and the other half were directed to the backend.
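The same definition written as a formula, with N as the number of cacheable requests in a given time frame and h as the hit rate:

```latex
\begin{aligned}
h &= \frac{\text{hits}}{\text{hits} + \text{misses}} \\
\text{backend requests} &= (1 - h)\,N \\
h = 0.5: \quad \text{backend requests} &= 0.5\,N \\
h = 0.9: \quad \text{backend requests} &= 0.1\,N
\end{aligned}
```

Raising the hit rate from 50% to 90% therefore cuts the number of cacheable requests reaching the backend by a factor of five.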

However, as you increase the TTL, the problem of serving stale data grows. This results in frustration for content editors because they now have to wait for the TTL to expire before users see their content – or even worse, users see products as in stock when they actually aren’t. This usually results in requests to lower the TTL or get rid of the cache entirely. We don’t want to do this.

What if we could have the best of both worlds? Synotio’s default TTL is 24 hours, but there are reasons to sometimes increase this further, so for practical purposes it can be treated as infinite. With such a long TTL, waiting for objects to expire is not an option. Therefore, we require work from the application layer (WordPress/WooCommerce) to address this issue.

Purging

There are only two hard things in Computer Science: cache invalidation and naming things.

Phil Karlton

Cache invalidation, or purging, is the process of telling Varnish that the contents of a URL have been updated. This is the purpose of the varnish-http-purge plugin. By automatically invalidating the relevant URLs, we achieve two things:

- TTL does not matter to see changes, as they will be displayed directly.

- We only need to clear parts of the cache, not the whole cache.

🚨 When users are given the option, they usually clear the entire cache to be sure that their content has been cleared. The action of clearing the entire cache has drastic performance costs and can even end up taking down the backend if the load is high enough as the backend will be bombarded with requests for object fetches when the cache data store is empty. In the case of large sites, it can take days or weeks for the cache to refill.

🚨 If you encounter stale data on the site, it’s tempting to use the “Clear” button in the admin. We do not recommend this. Instead, treat it as a problem and submit a support case so that it can be troubleshot and resolved.

The practical way to purge is to send an HTTP PURGE request as follows:

```http
PURGE /my/example/resource HTTP/1.1
Host: example.com
X-Purge-Method: Exact
```

The above request will purge the URL /my/example/resource, matching the string exactly. The value of X-Purge-Method can be either exact or regex and is case insensitive. If this header is missing (or has any other value), vcl_recv will fall back to an exact purge.

For regex queries, the request looks like this:

```http
PURGE /my/example/.* HTTP/1.1
Host: example.com
X-Purge-Method: Regex
```

The above request will purge all URLs starting with /my/example/ from the cache.
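The purge examples in this article do not show how PURGE requests are restricted, and without a restriction anyone who can reach Varnish could empty parts of your cache. A common precaution is an ACL check. In this sketch the ACL name purgers and its entries are assumptions; list the hosts your WordPress servers actually send PURGE requests from. Because Varnish concatenates repeated definitions of vcl_recv in the order they appear, this one must come before the vcl_recv that handles PURGE.

```vcl
## Hypothetical list of hosts allowed to send PURGE requests
acl purgers {
    "localhost";
    "127.0.0.1";
}

sub vcl_recv {
    if (req.method == "PURGE") {
        if (!client.ip ~ purgers) {
            ## Refuse the purge before any ban is issued
            return (synth(405, "Not allowed."));
        }
    }
}
```

If Varnish sits behind a TLS terminator on the same machine that does not use the PROXY protocol, every request appears to come from localhost, and this check would let everyone through.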

The actual purge logic is implemented in the vcl_recv section above, but is repeated below for clarity with the inclusion of the called subroutines:

```vcl
sub vcl_recv {
    ## Handle PURGE requests differently depending if we're purging an exact URL or a regex, set by the varnish-http-purge plugin
    if (req.method == "PURGE") {
        if (req.http.X-Purge-Method ~ "(?i)regex") { ## Check if X-Purge-Method matches "regex", case insensitive
            call purge_regex;
        } elsif (req.http.X-Purge-Method ~ "(?i)exact") { ## Check if X-Purge-Method matches "exact", case insensitive
            call purge_exact;
        } else {
            call purge_exact; ## Fall back to an exact purge if the header is missing or unrecognised
        }
        return (purge); ## Terminate the request. There is no point in sending it to the backend since it's meant for varnish.
    }
}

sub purge_regex {
    ## Construct a ban (purge) command in this way:
    ## ban req.url ~ "/my/example.*" && req.http.host == "example.com"
    ban("req.url ~ " + req.url + " && req.http.host == " + req.http.host);
}

sub purge_exact {
    ## Construct a ban (purge) command in this way:
    ## ban req.url == "/my/example/resource" && req.http.host == "example.com"
    ban("req.url == " + req.url + " && req.http.host == " + req.http.host);
}
```

Troubleshooting purging

If the application does not send PURGE requests to Varnish on all actions that modify data in cacheable URLs, these objects will not be updated and will display stale data until the TTL of the involved objects expires. When troubleshooting this issue, there are two headers to help you:

X-Cache: HIT

For cached data to be stale, by definition it must have been stored in the cache in the first place. If you get any other value but the data is still stale, this is a sign of a problem with the backend, not Varnish.

Age

The Age header indicates how long (in seconds) the item has been stored in the cache. You should see this value increase on subsequent requests. This also allows you to determine how long the information has been out of date.

When you issue a PURGE for a URL, the next request for that URL will be a cache miss, and the X-Cache header will be set to MISS.

On high-traffic sites, someone may request the URL between the moment you purged it and the moment you refresh your browser. In this case, your response will be a cache hit, but the purge will still have been carried out correctly. Therefore, look at the Age header before and after the purge (whether it was initiated manually or not): it should drop from a high value to a low value, because the object has been fetched from the backend more recently.

If the value of the Age header does not drop when a purge action is initiated, it is a sign that the application is either not trying or failing when trying to send PURGE requests to Varnish.
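If the Age header alone is not conclusive, one option is to expose Varnish’s hit counter for the object while you debug. This is a sketch, and the header name X-Cache-Hits is an assumption: the counter is 0 on the MISS that refetches an object after a purge, and then increases by one with every HIT on that new object.

```vcl
sub vcl_deliver {
    ## obj.hits is 0 on a miss (or a pass) and otherwise counts cache hits on the current object
    set resp.http.X-Cache-Hits = obj.hits;
}
```

Like Via and X-Varnish, this header reveals internal details, so you may want to remove it again once you are done troubleshooting.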

Edge Side Includes (ESI)

ℹ The official Varnish documentation on ESI is available here. It is highly recommended that you read it before making ESI implementations.

Varnish can include the content of other URLs by analysing the content of the response if beresp.do_esi = true (see vcl_backend_response in the VCL section). Take, for example, a page whose body contains the tag `<esi:include src="/README.txt"/>`: the content of /README.txt will be included in the body block in place of the tag.

ESI can also delete data!

🚨 Although ESI can remove data, don’t use it for sensitive stuff. Any instance where the page is rendered without ESI support will immediately expose it.

```html
<esi:remove>This is a secret message that the browser will never see if ESI support is enabled :)</esi:remove>
Hello!
```

Once processed by Varnish, the above will output:

```html
Hello!
```

You can also test whether ESI support is available or not. This is useful for checking fallback behaviour. Let’s take this for example:

```html
<esi:remove>
<script>var is_esi_supported = false;</script>
</esi:remove>
<!--esi
<script>var is_esi_supported = true;</script>
-->
<script>console.log("Was this page rendered with ESI support? ", is_esi_supported);</script>
```

If the page was processed by the Varnish ESI engine, it will look like this:

```html
<script>var is_esi_supported = true;</script>
<script>console.log("Was this page rendered with ESI support? ", is_esi_supported);</script>
```

If ESI support was not available, the output will be unchanged. The browser will treat `<esi:remove>` as an unsupported element and will just continue processing the HTML code inside it. The `<!--esi` tag will be treated as a comment and therefore its content will not be evaluated.

You can also encapsulate ESI tags inside the `<!--esi` tag:

```html
<!--esi
Below will do an ESI include:
<esi:include src="/README.txt"/>
-->
This is the only text on the page if rendered without ESI support.
```

The following three tags are available:

- `<esi:remove>content</esi:remove>` will remove content from the page.

- `<!--esi` and `-->` will be removed, but the content within them will be processed.

- `<esi:include src="/README.txt"/>` includes the content of another URL.

🚨 By default, Varnish only performs ESI processing on HTML or XML content (e.g. pages). Do not try to do it in JS/CSS files.

🚨 Varnish can only fetch plain HTTP (non-SSL) URLs; it does not speak HTTPS/SSL. To work around this, make sure to only use ESI to include resources with relative paths, for example /README.txt rather than https://example.com/README.txt.

What can you do with ESI? Here are a few ideas:

- Have different TTLs or cache keys on parts of the same page

- Include parts of dynamic content. For example, instead of asking the backend for the GeoIP location through an AJAX request that can’t be cached, embed it with an esi:include. This is mainly useful if the URL in the esi:include tag is a synthetic response with special logic in vcl_recv for it.

- Embed SVG content in HTML without having to process it via PHP

- Do partial caching, or fragment caching, to split a single page into different cacheable parts

- Clear only parts of a page without the need to re-render the whole thing again, for example changing the content of a header without having to clear all pages with that header.

- Have multiple product pages where each product card is an ESI inclusion. You can clear an individual product card without having to clear the product pages. This would also save you from having to look up all the pages that a product is referenced on when you clear the product.
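A minimal sketch of such a synthetic response, using a hypothetical URL, /esi/geoip, and assuming the visitor's country code is available in the X-GeoIP-Location header (as in the vcl_hash example) on the ESI sub-request. A cached page would then contain `<esi:include src="/esi/geoip"/>` wherever the country code should appear, and Varnish fills it in on every delivery without contacting the backend.

```vcl
sub vcl_recv {
    if (req.url == "/esi/geoip") {
        ## Answer directly from Varnish; 700 is an arbitrary internal status code
        return (synth(700, "GeoIP"));
    }
}

sub vcl_synth {
    if (resp.status == 700) {
        set resp.status = 200;
        set resp.reason = "OK";
        set resp.http.Content-Type = "text/plain; charset=utf-8";
        if (req.http.X-GeoIP-Location) {
            synthetic(req.http.X-GeoIP-Location);
        } else {
            synthetic("unknown");  ## No location header on this request
        }
        return (deliver);
    }
}
```

Since the header value is written straight into the page, make sure X-GeoIP-Location is set by your own infrastructure and not simply passed through from the client.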

Conclusion

If you managed to get through all this, give yourself a cookie 🍪

Is VCL your thing? Are you keen to make WordPress faster than seems natural and correct? Why not become part of our team!

Looking for an expert WooCommerce partner to make your site faster? Varnish is just the beginning of what we can do – contact us.